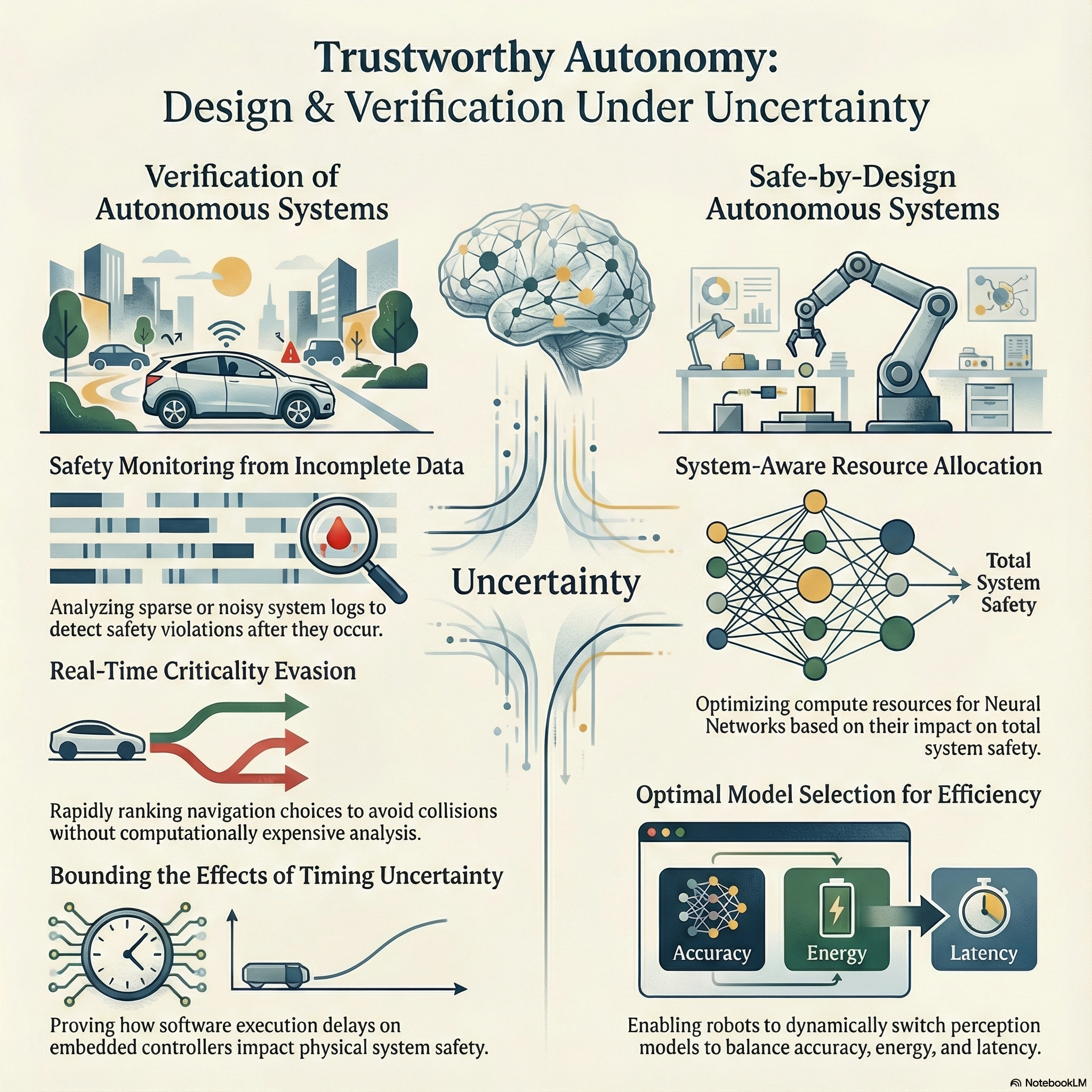

Trustworthy Autonomy - Design and Verification

My primary area of research is in design and verification of autonomous systems, which lies at the intersection of formal methods, real-time and embedded systems, and control theory. This research finds practical applications in diverse domains such as robotics, automotive, industrial, and home autonomous systems. My research primarily revolves around developing and verifying autonomous systems that guarantee performance and safety, establishing a high level of trust in their deployment, especially in real-world scenarios that involve critical safety considerations. This broad research area is can be referred to as Trustworthy Autonomy.

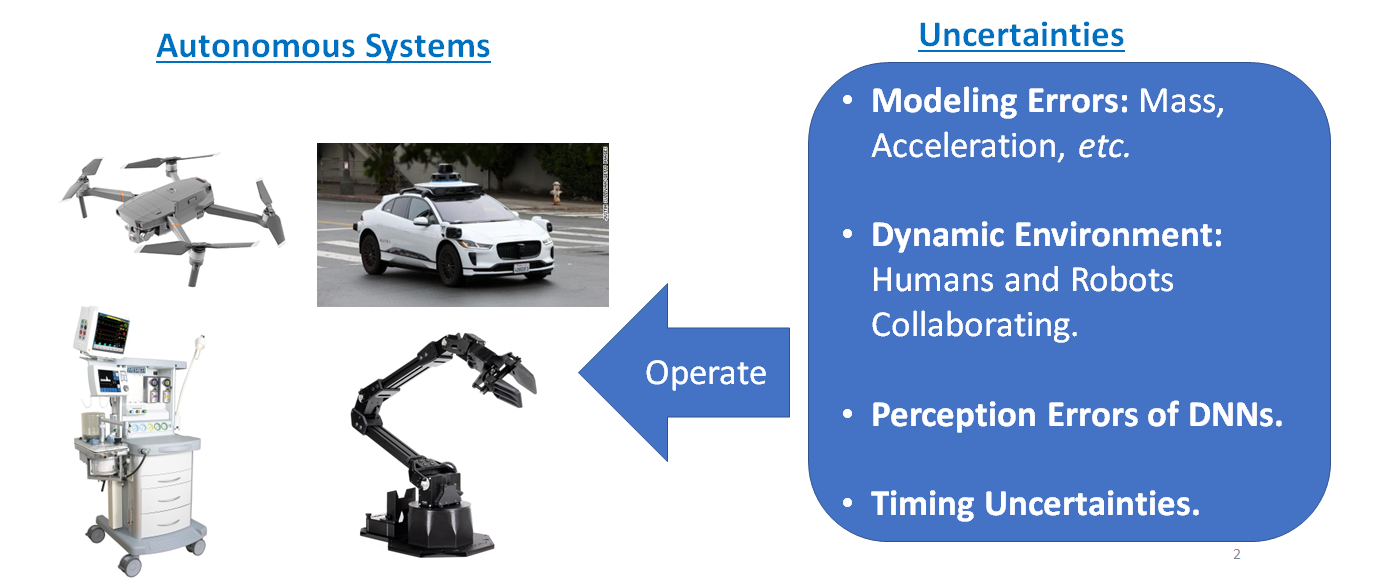

Autonomous Systems operate under a lot of uncertainties . . .

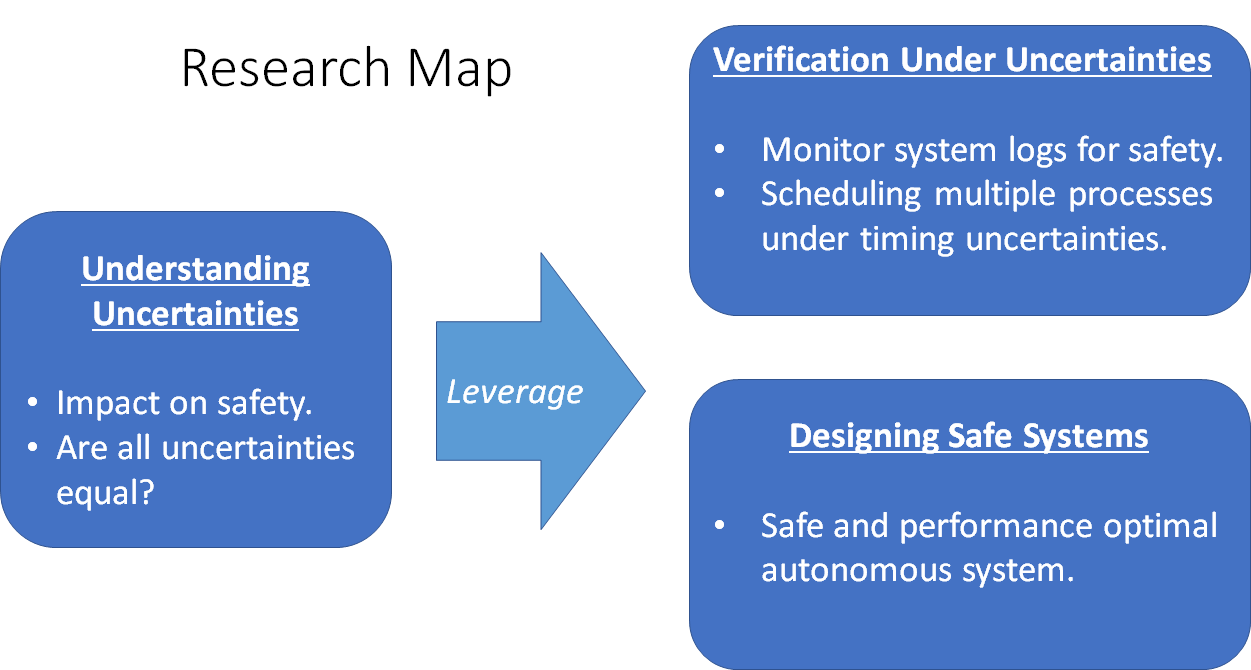

Broadly speaking, my research can be broken into the following three parts . . .

Broadly speaking, my research can be broken into the following three parts . . .

Understanding Uncertainties

The first part of the research involves creating artifacts to investigate and understand the characteristics and modeling of uncertainties.Formal verification of dynamical systems, such as autonomous vehicles, drones, spacecrafts, medical devices etc., often assumes that the system model is perfectly known, i.e., the model is free from uncertainties. But in reality, this is seldom the case—a dynamical system model is dependent on several parameters.

Let us consider the example of a robot operating on a factory floor, depicted in the above Fig. The robot’s objective is to navigate through the floor while avoiding collisions with a red wall. The robot’s model relies on a parameter called the coefficient of friction, which was assumed to have a fixed value during the modeling phase. Consequently, when developing the control design, the designed controller will operate according to this fixed coefficient of friction value. Once the controller is designed, it is typically subjected to verification to ensure correctness and safety. This means that the controller performs its intended task and does so without compromising safety, such as by avoiding collisions. It is important to note that this verification process is carried out on a model that assumes no uncertainties, specifically a fixed coefficient of friction. As a result, the controller is guaranteed to be correct and safe when operating with that fixed coefficient of friction value. However, due to an oil spill on the floor, the coefficient of friction has changed. In the previous traditional formal verification process, the robot’s behavior was assessed based on the assumption that the coefficient of friction remained constant (as used in the model). However, with the parameter altered by the oil spill, the previously established safety guarantee no longer holds. In particular, the set of states that the robot can reach, assuming the fixed coefficient of friction, is represented by the light blue region in the figure. Within this region, the robot exhibits no unsafe behavior, meaning it avoids colliding with the wall. However, due to the presence of the oil spill, the actual set of states that the robot can reach is now represented by the dark blue region, which includes the previously considered light blue region. As a result, this expanded region introduces the potential for unsafe behavior, namely colliding with the wall.

To address these concerns, I work on proposing the following:

(i) Techniques for verifying the safety and correctness of systems when faced with uncertainties.

(ii) Techniques for quantifying the permissible level of uncertainty that an autonomous system can handle while still maintaining safety.

(iii) Not all uncertainties are equal—some are more dramatic than others (with respect to safety).

Verification

Autonomous systems in safety-critical domains face challenges due to uncertainties, such as modeling errors and dynamic environments, hindering their reliability and autonomy. To instill trust, safety verification is crucial, ensuring system behavior remains within acceptable bounds despite uncertainties. Further, in safety- critical domains, provable safety verification is vital to prevent severe consequences. It enhances trust among stakeholders and assures the public, regulators, and end-users that autonomous systems can handle uncertain environments. My research addresses the need for provable safety verification, focusing on methodologies, algorithms, and frameworks to verify the safety of autonomous systems in uncertain environments. The goal is to understand the impact of uncertainties on system behavior and develop techniques for safe operation within predefined bounds.

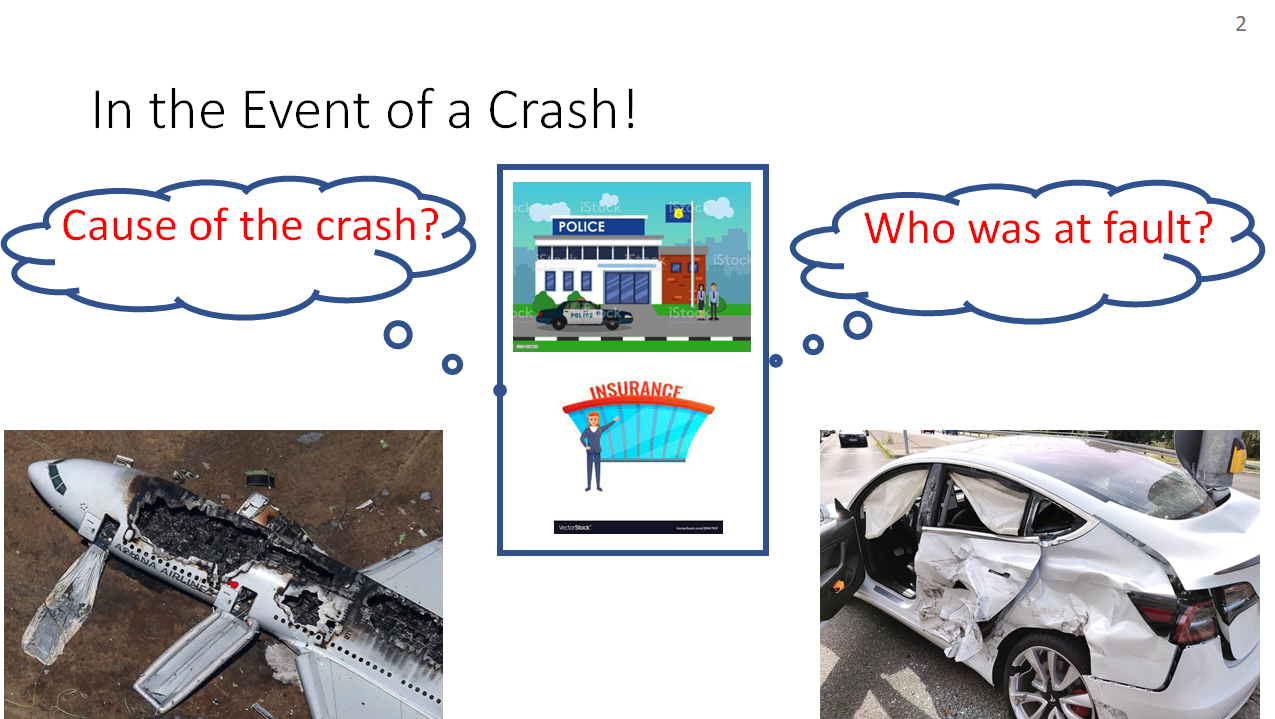

Monitoring Autonmous Systems - Analyzing logs to detect crashes!

Following a crash involving a plane or a vehicle, crucial questions arise from both law enforcement agencies and insurance companies: "What caused the crash?" and ``Who is at fault?"

To investigate these incidents, flights and vehicles are equipped with black boxes, devices that store critical information about the vehicle or plane leading up to the crash. These black boxes capture data such as controller inputs, voice recordings, and snapshots of state variables at specific time instants. However, accurately logging data at all time steps poses challenges for various reasons. The ability to log data accurately at every time step may be hindered by multiple factors. Systems designed on a tight budget often rely on uncertain or less accurate sensors for logging purposes. Additionally, logging data over an unreliable network can introduce uncertainties in the associated timestamps, leading to potential inaccuracies. In intermittently powered devices, allocating energy for continuous logging may not always be feasible.

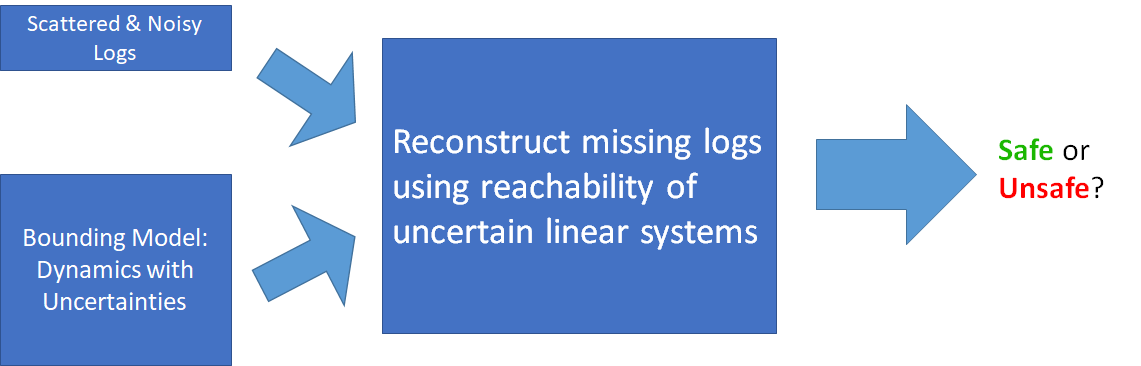

Consequently, the collected logs can exhibit noise and contain missing samples, complicating the analysis required for monitoring. To address these challenges, I work on proposing offline monitoring techniques capable of analyzing noisy logs with missing samples while accounting for uncertainties in timestamps. This offline monitoring approach aims to identify safety violations by thoroughly analyzing a given log.

Scheduling in Autonmous Systems - Handling workloads safely!

With the increasing complexity of autonomous systems, the implementation of multiple controllers on shared heterogeneous resources, such as multicore processors, GPUs, and FPGAs, etc., has become essential.

Consider the following example, as in the above figure, of a robot that is required to navigate along a predetermined path (black), avoiding obstacles (purple). For the overall functioning of the robot, it must operate multiple controllers on a single processor. These controllers include modules for perception, heat control, and a path follower, which directs the robot along its predetermined trajectory while avoiding obstacles. However, due to the simultaneous execution of multiple controllers on a shared processor, some controllers may fail to meet their deadlines, resulting in delayed computation of control inputs (i.e., deadline miss). In such a scenario, what happens if the path follower misses its deadline? The robot's trajectory can deviate from the planned path, potentially compromising safety by bringing it closer to obstacles (green and red). It becomes evident that not all patterns of deadline misses are equally safe. Deadline miss patterns that cause minimal deviation from the planned trajectory are considered safe (green), while patterns resulting in significant deviation and potential collisions are deemed unsafe (red). My research introduces techniques to prove the safety of autonomous system controllers in the presence of timing uncertainties.

Designing

Unlike verification, provable design provides a clear edge by allowing the creation of systems that are inherently safe. The focus in provable design is to develop safety systems right from the start, eliminating the need for additional post-design verification steps. This approach streamlines the process, saving valuable time and effort by seamlessly integrating safety measures into the design phase. As a result, it instills greater confidence in the overall safety of the system. My research introduces design paradigms that leverages on the unique characteristics of the systems and the uncertainties they encounter, leading to computationally scalable design algorithms.

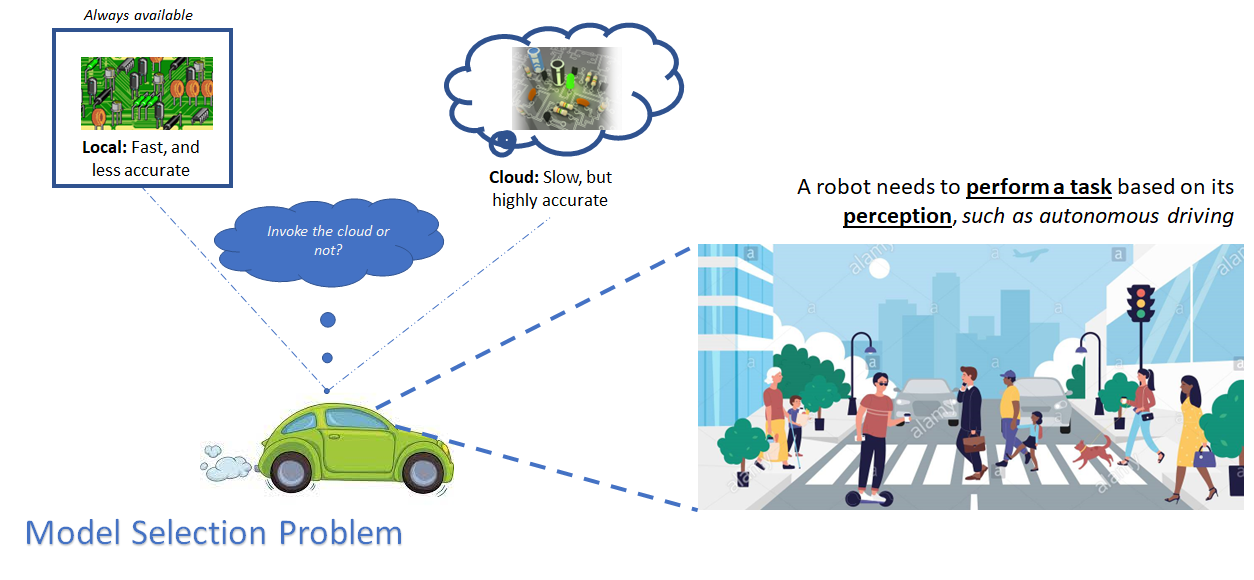

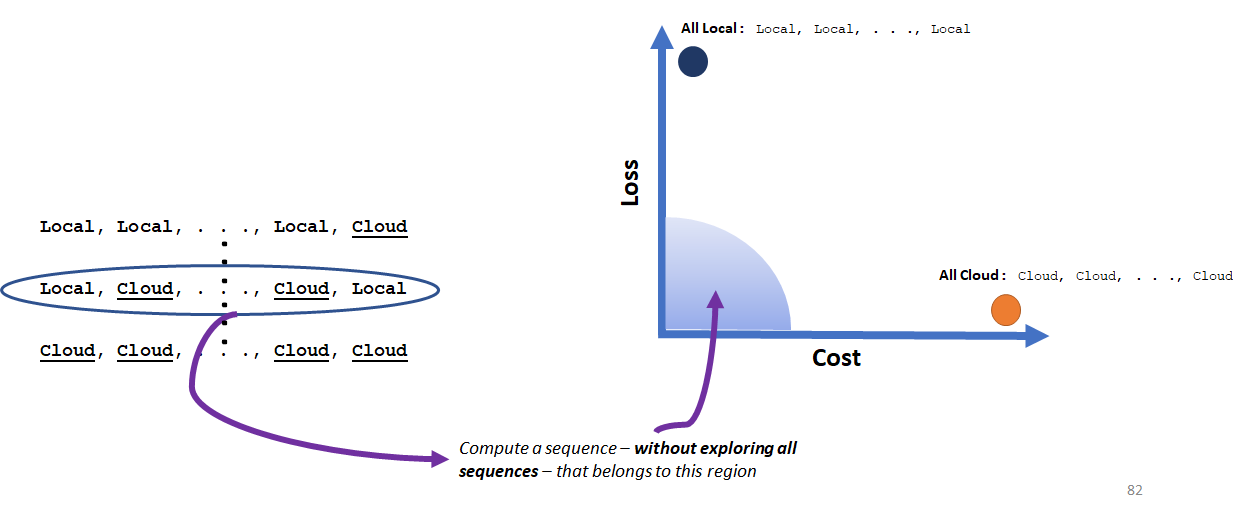

Consider a scenario where a robot needs to perform a task, such as autonomous driving, based on its perception. To aid in these tasks, the robot is equipped with a local perception model that is computationally efficient but has limited accuracy. Additionally, the robot has access to a cloud model that is highly accurate but is computationally extensive. The question that arises is whether the robot should use the cloud model at a given time step, depending on the situation.

If the robot were to invoke the cloud model at every time step, it would achieve high cumulative accuracy, bu this would come at a significant cumulative computational cost. On the other hand, relying solely on the local model for all time steps would result in a low cumulative computational cost but also a low cumulative accuracy. This could lead to potential unsafety. A smarter strategy in this context would involve selectively invoking the cloud model, especially during complex maneuvers, while using the local model for other instances. The goal is to strike a balance between low cumulative computational cost and high cumulative accuracy, ensuring completely safe navigation of the robot. This situation is commonly known as the model selection problem. My research proposes strategies that can determine an optimal sequence for invoking the models, enabling the robot to achieve this desired balance in a safe and provably optimal manner.

Trustworthy Autonomy not only entails a variety of technical challenges, but also many societal and philosophical ones—an aspect that I intend to study more in future.

Towards achieving this goal, my list of publications can be found here.

/autmn_lab/

/autmn_lab/